Welcome to the

Inertial Systems and Sensor Fusion Lab

The Inertial Systems and Sensor Fusion Lab is a

collaboration between the Divisions of Aeronautical and Electronics

Engineering at ITA. It conducts research and development of algorithms

and subsystems for inertial navigation aided by sensor fusion,

guidance, estimation and control of dynamic systems. Historically, the

lab is a continuation and deepening of the scope and objectives of the

early Laboratory of Computer Vision and Active Perception, which in the

1990s developed at ITA a project with support of state and federal

funding agencies, respectively FAPESP and CAPES, in the area of Active

Computer Vision using an anthropomorphic head equipped with stereoscopic

vision. The lab has been funded by Project

FINEP-DCTA-INPE/Inertial Systems for Aerospace Application (Project

SIA), Project CNPq-FAPESP/INCT Space Sciences, and Project

FINEP-UnB-ITA-XMobots/Development of a Mini-UAV with a

Gyrostabilized Imaging Pod. The results in the areas of estimation and

control of dynamic systems, navigation, guidance, and computer vision

have been applied to university satellites and UAVs.

The lab has integrated and tested

on the ground and in flight gyrostabilized imaging pods and sensor

fusion algorithms for inertial navigation aided by GPS, altimeter, and

magnetometer, and georeferenced pointing. The lab has developed the

embedded software running on board the imaging pod for real-time

navigation, attitude estimation, and control of gyrostabilization and

georeferenced pointing. More recently, the lab has directed efforts

towards developing and testing computer vision-aided inertial

navigation in real time to provide a robust solution in scenarios where

jamming results in GPS outage.

[Scope and objectives] [Active vision head] [History]

[Course work] [Equipment]

[Publications] [Staff and collaborators]

[Photos/Videos]

Scope and objectives:

Inertial navigation aims at

the estimation of position, velocity, and often attitude of a vehicle

from inertial measurements acquired on board, that is, specific force

and angular rate with respect to an inertial reference frame provided,

respectively, by accelerometers and rate-gyros assembled within an

inertial measurement unit (IMU). Inertial navigation has the advantage

of autonomy with respect to external aiding. However, sophisticated,

expensive sensors are needed to keep the navigation errors within

the acceptable limits for inertial navigation, often considered

to be on the order of one nautical mile per hour of operation. The use

of low-cost inertial sensors severely degrades accuracy,

and thus additional sensors are required to reach the

acceptable error limits previously mentioned. Hence, the inertial

navigation system (INS) aided by sensor fusion becomes dependent on

aiding sensors that compromise the previous autonomy since such aiding

sensors are subject to external disturbances, or intentional jamming.

Navigation aiding via sensor fusion arises from the statistical

processing of signals from GPS receivers, magnetometers,

baro-altimeters, and analog or digital video cameras.
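
As a rough illustration of the trade-off described above, the sketch below integrates a constant accelerometer bias for a stationary vehicle in one dimension, first unaided and then with GPS position fixes fused by a simple Kalman filter. It is a simplified model under stated assumptions, not the lab's algorithm: it ignores gravity, attitude errors, and Schuler dynamics, and the function names, noise levels, and tuning values are hypothetical. Under this simplification, a drift of one nautical mile (1852 m) after one hour corresponds to a bias of 2 x 1852 / 3600^2, about 2.86e-4 m/s^2, or roughly 29 micro-g.

```python
import numpy as np

G = 9.80665    # standard gravity, m/s^2
NMI = 1852.0   # one nautical mile, m


def drift_from_bias(bias, t):
    """Position error (m) after t seconds of integrating a constant bias (m/s^2)."""
    return 0.5 * bias * t**2


def bias_for_drift(drift, t):
    """Constant bias (m/s^2) that alone yields `drift` metres of error after t seconds."""
    return 2.0 * drift / t**2


def final_position_error(bias, duration, dt=1.0, gps_period=None,
                         gps_sigma=5.0, accel_sigma=0.01, seed=0):
    """Stationary 1D vehicle; returns |position error| (m) at the end of the run.

    gps_period=None gives pure inertial integration; an integer k applies a
    Kalman position update every k steps (illustrative tuning values only).
    """
    rng = np.random.default_rng(seed)
    F = np.array([[1.0, dt], [0.0, 1.0]])   # position/velocity transition
    B = np.array([0.5 * dt**2, dt])         # effect of the measured acceleration
    H = np.array([[1.0, 0.0]])              # GPS observes position only
    Q = np.outer(B, B) * accel_sigma**2     # process noise from accelerometer error
    R = gps_sigma**2                        # GPS position noise variance
    x = np.zeros(2)                         # estimated [position, velocity]
    P = np.zeros((2, 2))
    for k in range(1, int(round(duration / dt)) + 1):
        # True acceleration is zero, so the accelerometer reports only its bias.
        x = F @ x + B * bias
        P = F @ P @ F.T + Q
        if gps_period and k % gps_period == 0:
            z = rng.normal(0.0, gps_sigma)  # noisy fix of the true position (0 m)
            S = H @ P @ H.T + R
            K = (P @ H.T) / S
            x = x + (K * (z - H @ x)).ravel()
            P = (np.eye(2) - K @ H) @ P
    return abs(x[0])
```

Calling `final_position_error(bias, 3600.0)` reproduces the quadratic drift of `drift_from_bias`, whereas passing `gps_period=1` keeps the error bounded for as long as fixes keep arriving, which is why the aided system inherits the vulnerability of its aiding sensor to outage or jamming.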

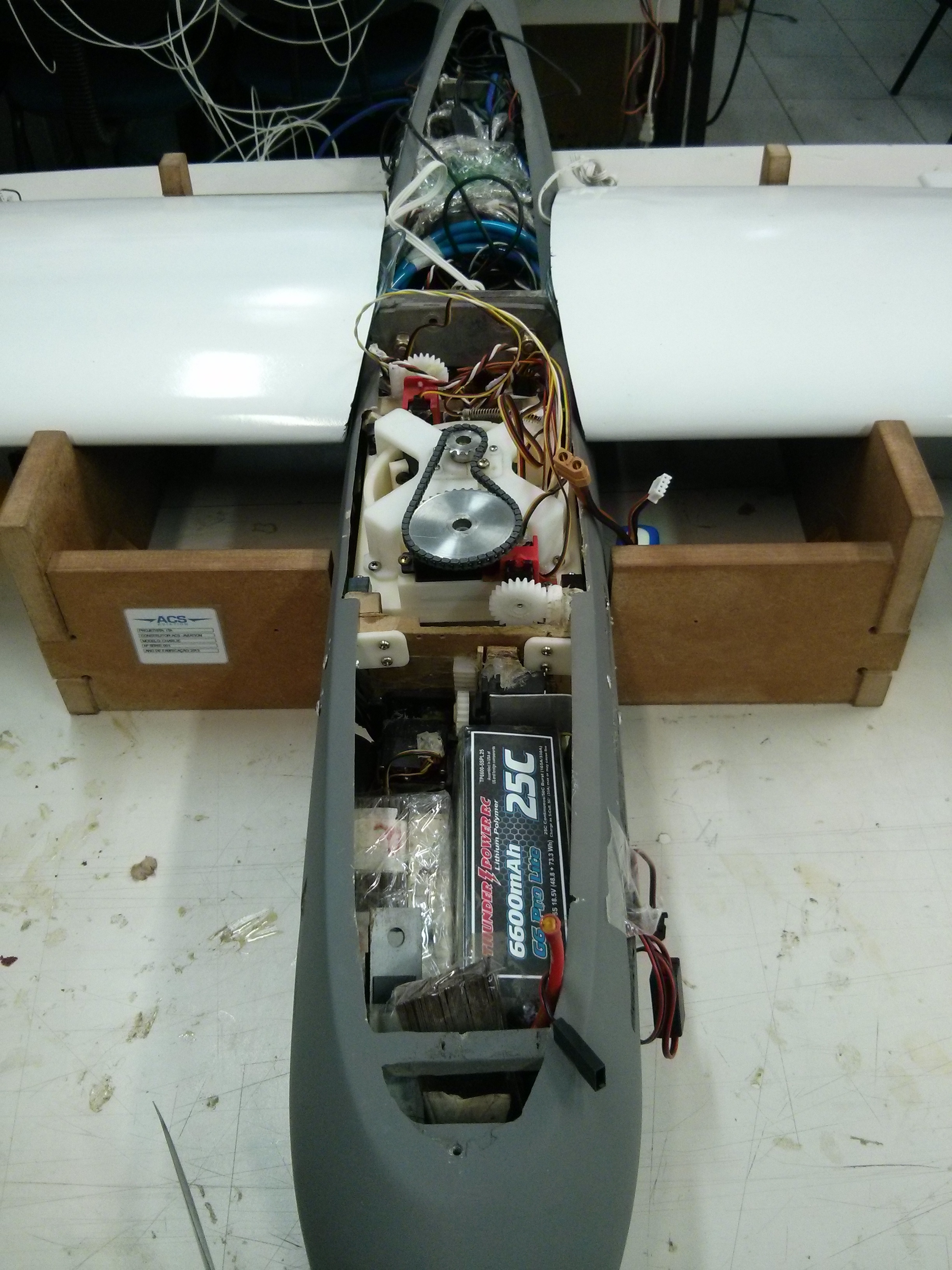

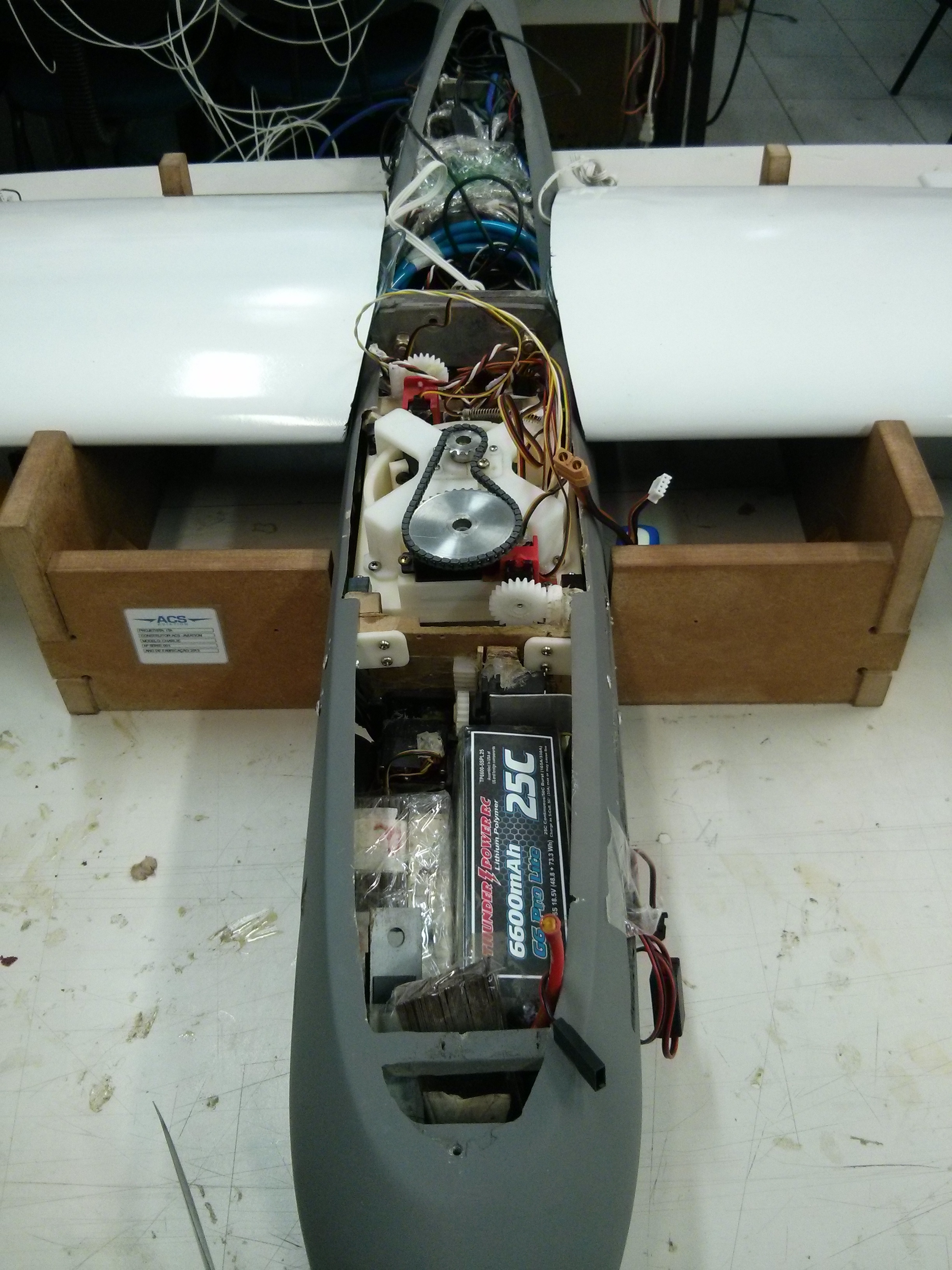

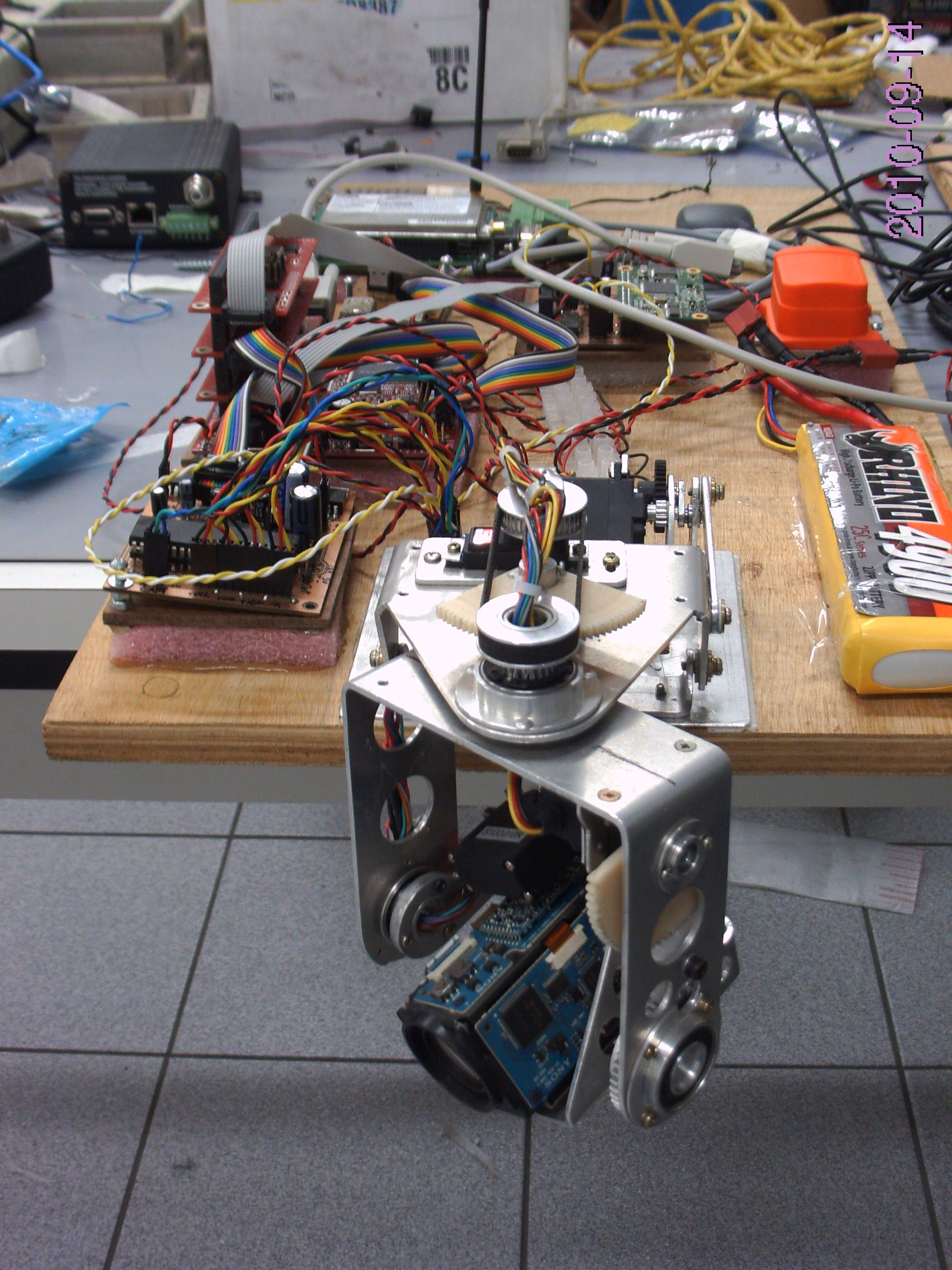

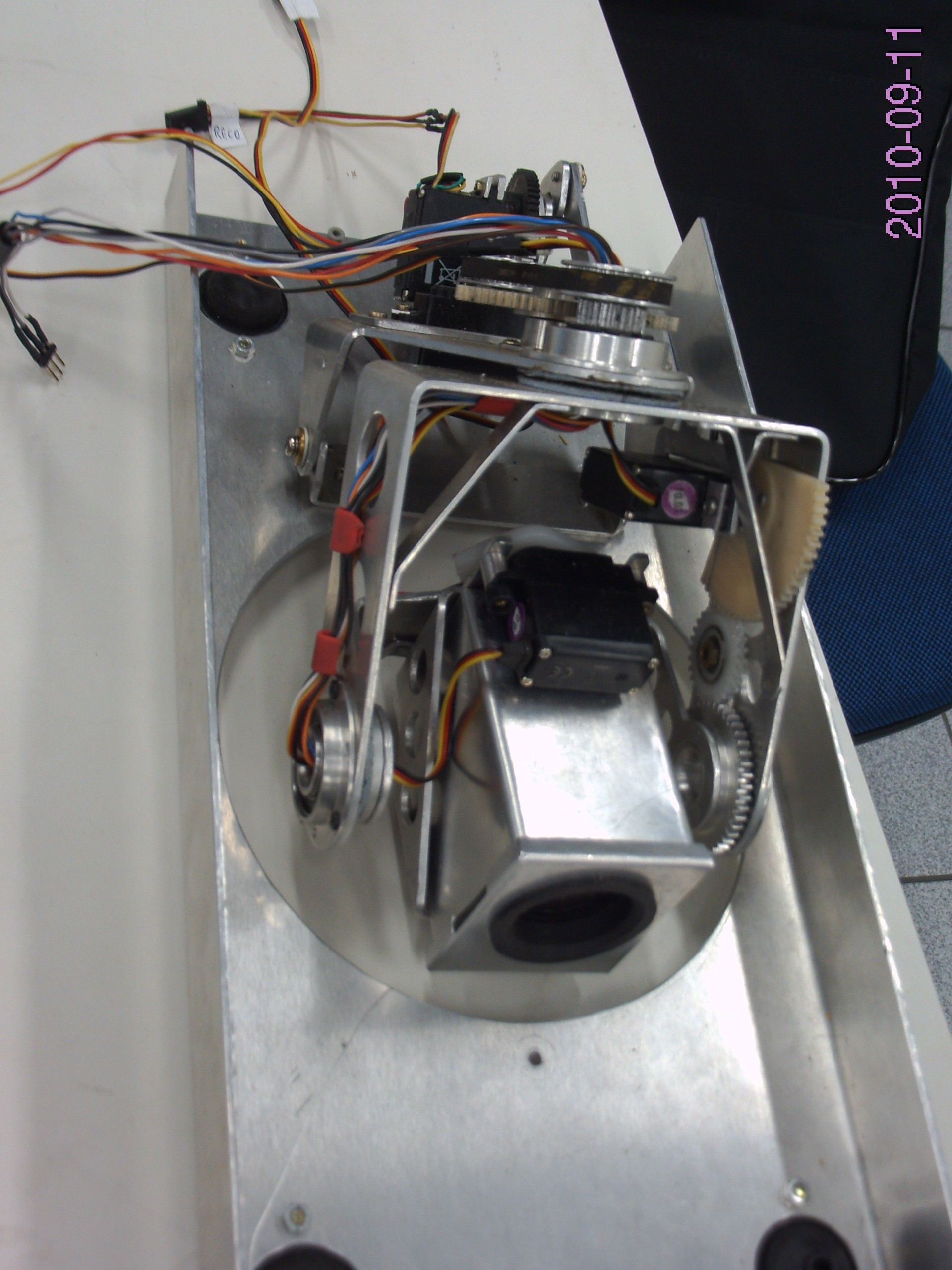

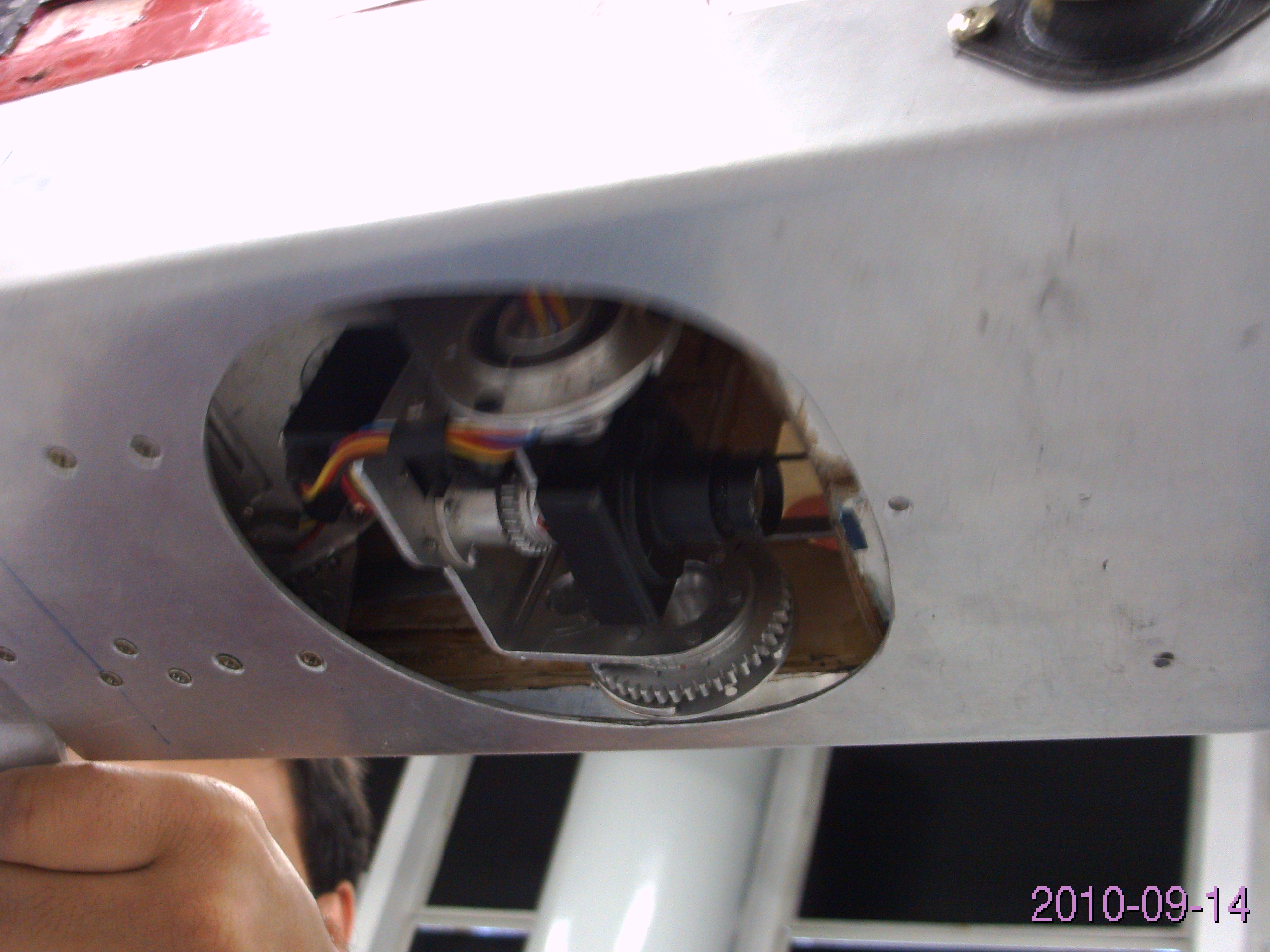

More recently, the staff and collaborators in the Inertial Systems and Sensor Fusion Lab have

conceived, designed, developed, integrated, and tested various imaging

pod prototypes and the embedded software for real-time control that

provides the capabilities of aided inertial navigation,

gyrostabilization, and georeferenced pointing. Most of the mechanical

parts in the latter versions of the imaging pod are now prototyped in

the lab with 3D printing.

A more detailed consideration

of the potential advantages of sensor fusion for inertial navigation

aiding favors the use of computer vision to provide robustness to GPS

outage. Vision is among the most powerful senses available.

Multidisciplinary research involving physics, optics, estimation and

control of dynamic systems, as well as neurophysiology, psychology, and

psychophysics provides the knowledge required for modeling and

constructing computational theories to describe the visual process, and

for conceiving and implementing systems that attempt to emulate the

human visual apparatus. Vision provides information about the pose

(position and attitude) and properties of observed objects and their

mutual relationships, their surroundings, and the observer's motion.

Visual information extracted via

computer vision algorithms, the mathematical property of observability

in dynamic systems, and the real-time implementation of embedded

algorithms for the statistical fusion of measurement data from a

variety of sensors on board allow one to tackle the problem of

navigation with small-sized vehicles during GPS outage and the

estimation of the observed three-dimensional (3D) structure. One of the

most motivating challenges in the lab is to successfully meet the technical

requirements of real-time sensor fusion for accurate navigation in

spite of limited resources. The lab is equipped with sensors and autopilots, a commercial

model aircraft airframe, and electrically powered sailplanes conceived, designed

and prototyped in the lab. A bicycle was also equipped for a proof of

concept of algorithms and subsystems under realistic operation

conditions. Flight tests occur when a NOTAM is issued by SRPV-SP (the

Aeronautical Authority in charge of controlling the access to Brazilian

airspace in the State of São Paulo).

Anthropomorphic head with stereoscopic cameras and asymmetric vergence for experiments with active vision (1995-1999)

The

anthropomorphic head relied on DC motors to rotate the pan, tilt, and

asymmetric vergence axes. Two controller units encompassing logic and

power circuits for PID control and PWM driving, respectively, were the

interface for sending command signals to the head. Imaging was carried

out with CCD cameras with servocontrolled lenses wherein aperture,

focus, and zoom were adjusted according to the requirements of the

visual task. (TRAEDV-N.MPG

- 2.6MB).

The lab, at the time, had the following equipment:

- 2 Hitachi KP-M1U monochrome cameras and 1 Hitachi VK-370 color camera;

- 2 Pentium 166 MHz PCs with 4 GB SCSI hard disks, 32 MB RAM, 8x CD-ROM drives, and 17" CRT monitors;

- TRC-Helpmate Bi-Sight head;

- VCR and CRT TV;

- FAST video acquisition board with a special-purpose MPEG decompression chip;

- Fujinon H10x11E-MPX31 servocontrolled lenses and DT3552 image acquisition boards.

History: the Computer Vision and Active Perception lab (1995-1999):

"...robust solutions to the vision problem did not materialize, mainly

because Marr has left out of his theory a very important fact: that all

visual systems in nature, from insects to fish, snakes,

birds, and humans, are active. By being active, they control the process of image acquisition in space and

time, thus introducing restrictions that facilitate the

recovery of information about the three-dimensional world (reconstruction).

"I move, therefore I see" is a fundamentally true statement. By becoming

stationary, the human eye loses perception."

Y. Aloimonos (1993)

Computer

Vision is a science based on theoretical foundations and requires

experiments to validate a theory and/or algorithm. Thus, coupled with

the intent of studying task-oriented visual perception (purposive

vision) and visual feedback, vision algorithms were tested in a

binocular vision head capable of changing the image acquisition

parameters in real time.

Imaging became a dynamic process to be controlled in accordance with

the visual information already obtained and still to be extracted from the

next images. The visual information from the computer vision algorithms

was employed to control the pose of the head, the vergence angles of

the cameras, the focal lengths, focus, and lenses' apertures through

activation signals sent by a network of computers running the vision

and control algorithms in real time. It should be noted that the computing power of

the PCs then used, Pentium 166 MMX, was quite limited.

The lab, at the time,

investigated techniques for the identification, estimation, and control

of the vision head, calibration of vision systems, and computer vision

algorithms aiming at the implementation of visual behaviors for:

- Automation of processes wherein vision is a sensor;

- Vision-aided inertial navigation;

- Target tracking;

- 3D reconstruction from stereoscopic vision, egomotion, and texture.


Course work:

EE-292:

Visão Computacional para Controle de Sistemas (1995-2000)

ELE-82: Aviônica

(2000-2014)

ELE-26: Sistemas Aviônicos

EES-60: Sensores e Sistemas

para Navegação e Guiamento

EE-294: Sistemas

de Pilotagem e Guiamento

EE-295: Sistemas

de Navegação Inercial e Auxiliados por Fusão Sensorial


Equipment:

- Airframe Hangar 9 model Alpha 60 with various internal combustion engines;

- Electrically powered sailplanes;

- Commercial autopilots for control, guidance, and navigation of the above mini-UAVs;

- Tools and machine shop for simple repairs and manufacturing;

- 3D printer;

- Distinct 3-gimballed imaging pod prototypes;

- Computing

resources, embedded implementations included, and software for the

development of real-time navigation systems, computer vision, and

sensor fusion;

- Analog and digital cameras and servocontrolled lenses;

- Telemetry, telecommand, and video links;

- Various low-cost IMUs with integrated GPS receiver, altimeter, and magnetometer;

- GPS receivers;

- Magnetometers.


Publications

Viegas, W. da V.C.;

Waldmann, J.; Santos, D.A.; Waschburger, R. Controle em três eixos para

aquisição de atitude por satélite universitário partindo de condições

iniciais desfavoráveis. Controle & Automação (Impresso), v. 23, p.

231-246, 2012.

Ferreira, J.C.B.C.;

Waldmann, J. Covariance Intersection-Based Sensor Fusion for Sounding

Rocket Tracking and Impact Area Prediction. Control Engineering

Practice, v.15, p. 389-409, 2007.

Waldmann, J.

Feedforward INS aiding: an investigation of maneuvers

for in-flight alignment. Controle & Automação (Impresso), v. 18, p.

459-470, 2007.

Waldmann, J.

Line-of-Sight Rate Estimation and Linearizing Control of an Imaging

Seeker in a Tactical Missile Guided by Proportional Navigation. IEEE

Transactions on Control Systems Technology, v.10, n.4, p. 556-567,

2002.

Caetano, F. F.;

Waldmann, J. Attentional

Management for Multiple Target Tracking by a Binocular Vision Head.

SBA. Sociedade Brasileira de Automática, Campinas, v.11, n.3, p.

187-204, 2000.

Viana, S. A. A.;

Waldmann, J.; Caetano,

F.F. Non-Linear Optimization-Based Batch Calibration with Accuracy

Evaluation. SBA. Sociedade Brasileira de Automática, Campinas, v. 10,

n.2, p. 88-99, 1999.

Waldmann, J.;

Bispo, E. M. Saccadic Motion Control for Monocular Fixation in a

Robotic Vision Head: A Comparative Study. Journal of the Brazilian

Computer Society, Campinas, v. 4, n.3, p. 61-69, 1998.

Chagas, R.A.J.; Waldmann, J. A Novel

Linear, Unbiased Estimator to Fuse Delayed Measurements in Distributed

Sensor Networks with Application to UAV Fleet. Springer, 2013 (Edited

Volume -Bar-Itzhack Memorial Symposium on Estimation, Navigation, and

Spacecraft Control - Daniel Choukroun (Ed.)).

Chagas,

R.A.J.; Waldmann, J. A Novel Linear, Unbiased Estimator to Fuse Delayed

Measurements in Distributed Sensor Networks with Application to UAV

Fleet. Itzhack Y. Bar-Itzhack Memorial Symposium on Estimation,

Navigation, and Spacecraft Control, 2012, Haifa, Israel.

Chagas,

R.A.J.; Waldmann, J. Observability Analysis for the INS Error Model

with GPS/Uncalibrated Magnetometer Aiding. Itzhack Y. Bar-Itzhack

Memorial Symposium on Estimation, Navigation, and Spacecraft Control,

2012, Haifa, Israel.

Chagas, R.A.J.; Waldmann, J. Geometric Inference-Based Observability

Analysis Digest of INS Error Model with GPS/Magnetometer/Camera Aiding.

19th Saint Petersburg International Conference on Integrated Navigation

Systems, 2012, Saint Petersburg, Russia.

Lustosa, L.R.; Waldmann, J. A novel

imaging measurement model for vision and inertial navigation fusion

with extended Kalman filtering. Itzhack Y. Bar-Itzhack Memorial

Symposium on Estimation, Navigation, and Spacecraft Control, 2012,

Haifa, Israel.

Cordeiro,

T.F.K.; Waldmann, J. Covariance analysis of accelerometer-aided

attitude estimation for maneuvering rigid bodies. VII Congresso

Nacional de Engenharia Mecânica CONEM 2012, São Luís - MA.

Godoi, R.G.;

Waldmann, J. Sistema de Controle de Atitude Para Satélite Estabilizado

em 3 Eixos com Rodas de Reação. VII Simpósio Brasileiro de Engenharia

Inercial, 2012, São José dos Campos, SP.

dos Santos, S.R.G.;

Waldmann, J. Desenvolvimento de um Ambiente de Teste para

Implementação em Tempo Real de Sistema de Controle de Atitude. VII

Simpósio Brasileiro de Engenharia Inercial, 2012.


Staff and collaborators (September 2014):

- Jacques Waldmann:

Professor - Head - ITA

- Raul Ikeda

Gomes da Silva:

Professor - INSPER

- Rafael Marcus Contel Coutinho

Technician

- Leonardo Teles da Silva

Technician

- Marcus Vinicius da Costa Ramalho

Aeronautical Engineer - Collaborator - Itamaraty

- Maurício

Andrés Varelas Morales

Professor - Collaborator - ITA

- Sérgio Frascino Müller de Almeida

Professor - Collaborator - ITA

- Thiago Felippe Kurudez Cordeiro

Professor - Collaborator - UnB

- Ronan Arraes Jardim Chagas

D.C. Researcher - Collaborator - INPE

- Tiago Bücker

M.C. Electronic Engineer - Collaborator


Photos/Videos:

First configuration used for monocular vision tracking with pinhole

microcamera Pacific VP-500 and lens with focal length 3.7mm. (Dec/96).

Algorithm

1: Motion detection, gray level segmentation and motion centroid

estimation. Requires a background with a quite homogeneous texture. (AUTOTR-N.MPG

- 525 KB)
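
The steps named in Algorithm 1 can be sketched roughly as follows. This is only an illustration of frame differencing, thresholding, and centroid estimation on grayscale frames, not the code used in the lab; the function name `motion_centroid` and the threshold value are assumptions. With a tracking camera, a homogeneous background helps because the apparent background motion then produces little gray-level change between frames.

```python
import numpy as np


def motion_centroid(prev, curr, diff_thresh=25):
    """Return the (row, col) centroid of pixels that changed between two frames.

    prev, curr: 2D uint8 grayscale images of equal shape.
    diff_thresh: hypothetical gray-level change needed to count as motion.
    Returns None when no pixel exceeds the threshold.
    """
    # Widen the type before subtracting so uint8 values do not wrap around.
    diff = np.abs(curr.astype(np.int16) - prev.astype(np.int16))
    mask = diff > diff_thresh            # gray-level segmentation of the change
    if not mask.any():
        return None
    rows, cols = np.nonzero(mask)
    return float(rows.mean()), float(cols.mean())
```

The centroid could then serve as the error signal for the pan and tilt axes, driving the head so that the moving region stays near the image center.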

Algorithm

2: Compensates the background motion induced by camera tracking motion

by means of measurements from encoders attached to the head axes,

morphological filtering (erosion followed by dilation), motion

segmentation, and motion centroid estimation. Requires knowledge of the

focal length of the lenses. (EDVATR-N.MPG

- 1.7MB)
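
A minimal sketch of the idea behind Algorithm 2 follows, assuming a pure pan rotation about the optical center and small angles near the image center. It shows why the focal length is needed: the encoder angle is converted to a pixel shift through the focal length expressed in pixels. The function names, the 3x3 structuring element, the sign convention of the shift, and the threshold are assumptions made for illustration.

```python
import math

import numpy as np


def pan_shift_pixels(delta_pan_rad, focal_length_mm, pixel_pitch_mm):
    """Image shift (pixels) at the image center for a pan rotation of the camera."""
    return (focal_length_mm / pixel_pitch_mm) * math.tan(delta_pan_rad)


def _neighbourhoods(mask):
    """Stack the nine 3x3-neighbourhood shifts of a boolean mask (False outside)."""
    h, w = mask.shape
    p = np.pad(mask, 1, constant_values=False)
    return np.stack([p[r:r + h, c:c + w] for r in range(3) for c in range(3)])


def erode(mask):
    return _neighbourhoods(mask).all(axis=0)


def dilate(mask):
    return _neighbourhoods(mask).any(axis=0)


def opening(mask):
    """Erosion followed by dilation: removes specks smaller than the 3x3 element."""
    return dilate(erode(mask))


def compensated_motion_mask(prev, curr, shift_cols, thresh=25):
    """Motion mask after aligning prev to curr by an integer column shift."""
    aligned = np.roll(prev, shift_cols, axis=1)
    diff = np.abs(curr.astype(np.int16) - aligned.astype(np.int16))
    mask = diff > thresh
    # Columns that wrapped around in np.roll carry no valid background data.
    if shift_cols > 0:
        mask[:, :shift_cols] = False
    elif shift_cols < 0:
        mask[:, shift_cols:] = False
    return opening(mask)
```

In use, the shift from `pan_shift_pixels` would be rounded to an integer and passed as `shift_cols`, and the centroid of the resulting mask would give the target position. A tilt rotation could be handled the same way along the row axis.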

Configuration

used for vergence, pan, and tilt control of the stereoscopic vision

head, switching between the video signal sources, and development

of a graphical user interface. (Jul/96 - Mar/97)

Video presenting the graphical user interface, and the pose and angular velocity control modes. (CNPQ-S.MPG

- 39.7MB)

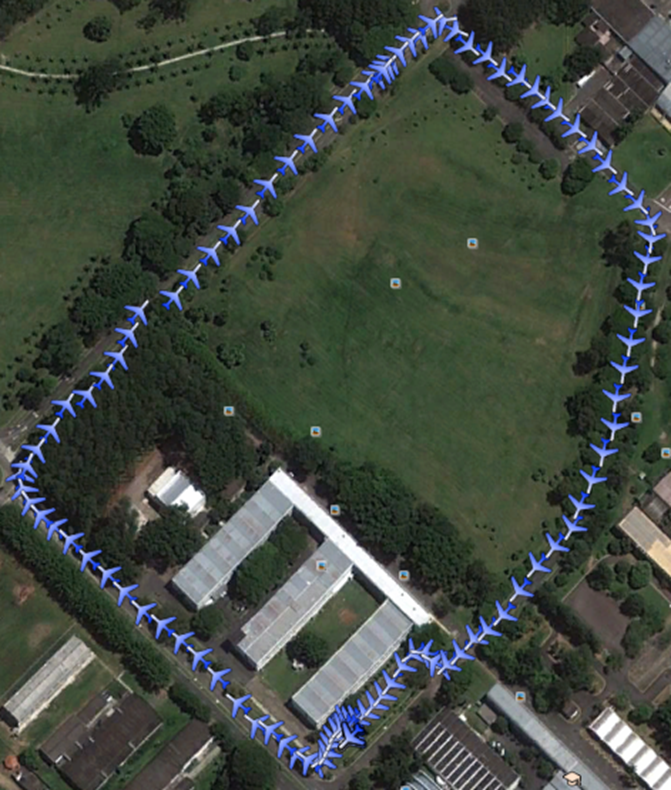

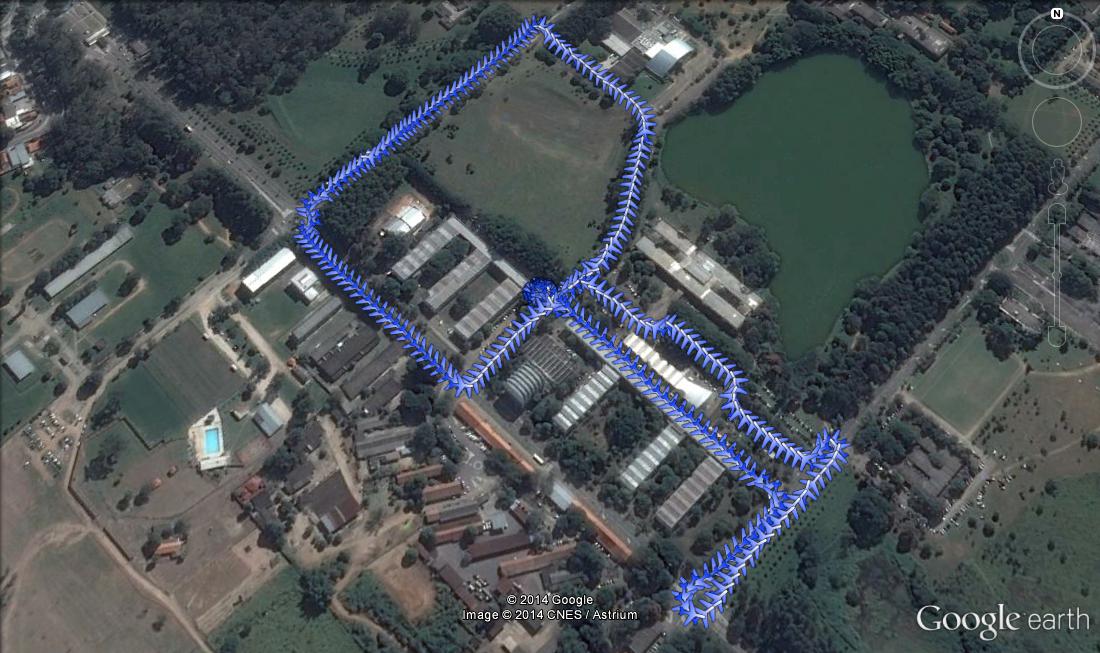

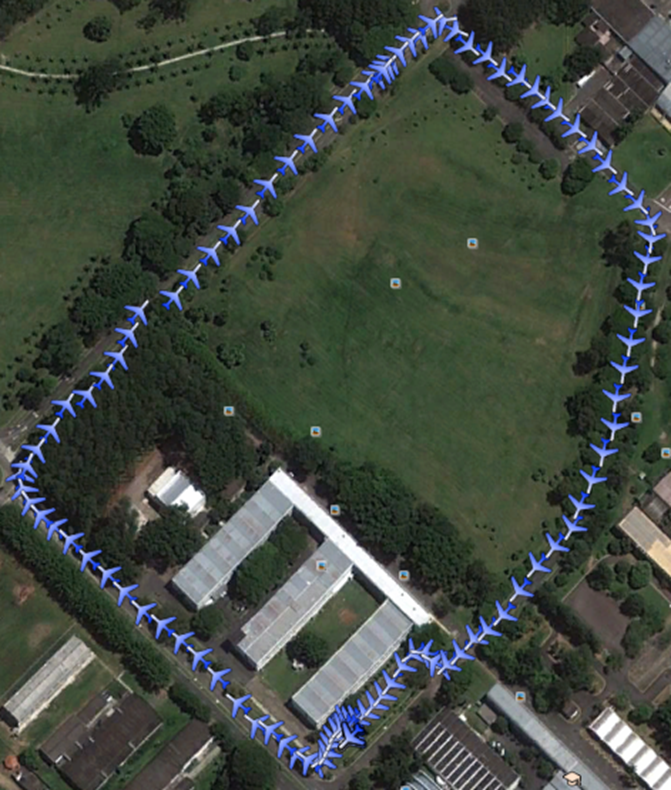

Test platforms: UAVs and bicycle instrumented for INS/GPS/magnetometer/camera integration

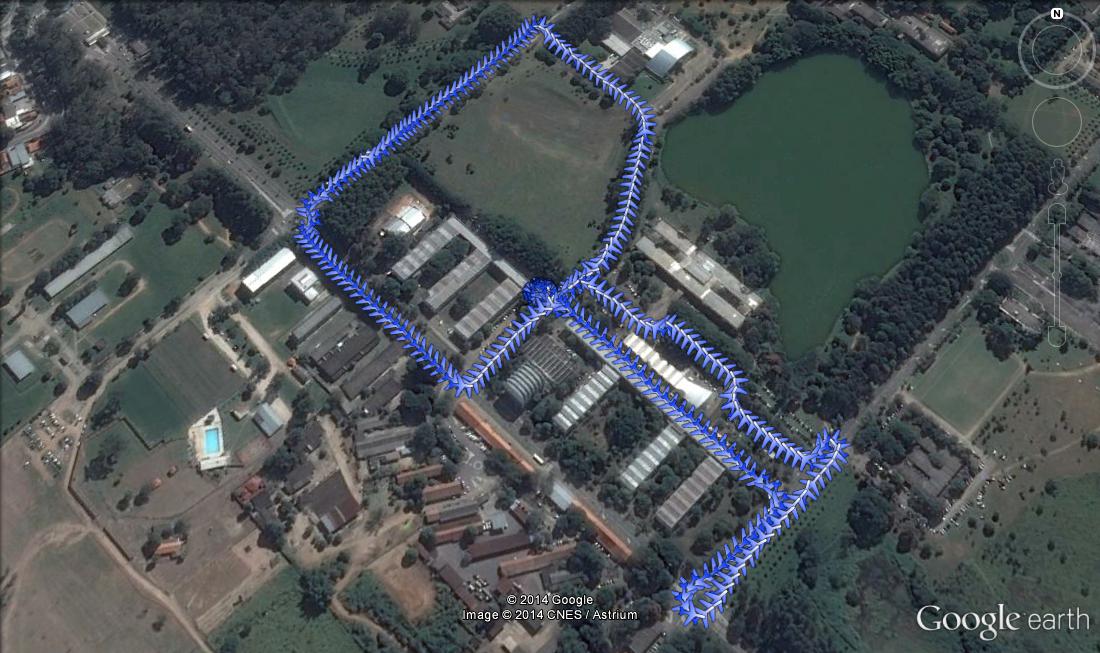

Results of

GPS/magnetometer-aided inertial navigation - various

experiments with the sensor suite, mounted on a helmet, moving through the ITA campus

either on foot or by bicycle:

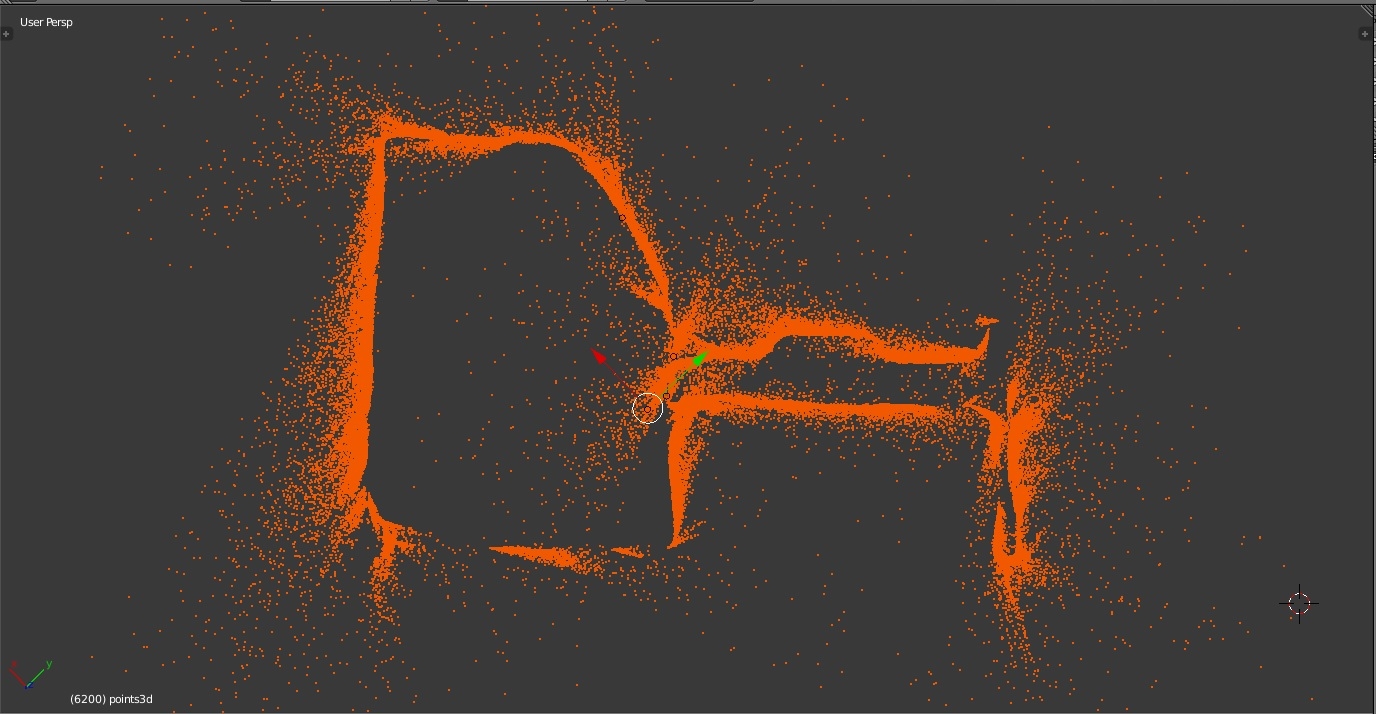

Top view of a

3D reconstruction of the structure as viewed from the camera on the

helmet during GPS/magnetometer-aided inertial navigation. The

optical flow is assumed to arise solely from the

camera egomotion (the scene is assumed static).

Images with

moving cars, cyclists, or pedestrians with respect to the otherwise

static scenario were manually discarded when computing the structure

from motion (SFM) solution.

Video rendering of the optical flow-based SFM

solution as viewed from the cyclist helmet camera during

GPS/magnetometer-aided inertial navigation in a 5-minute

experiment.

Octree-based visualization with cubic voxels of 1 m

edge, starting from the reference frame origin marked with the

white circle in the above image.

Altitude differences displayed with varying colors.
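
To make the voxel representation concrete, the sketch below quantizes reconstructed 3D points into cubes of 1 m edge and keeps the mean altitude per occupied voxel, which could then be mapped to a color. It uses a flat dictionary rather than an octree, so it only illustrates the quantization step; the function name `voxelize` and the assumption that the third coordinate is altitude are hypothetical.

```python
import math
from collections import defaultdict


def voxelize(points, edge=1.0):
    """Map (x, y, z) points in metres to occupied voxels of the given edge length.

    Returns a dict from integer voxel index (i, j, k) to the mean z of the
    points that fall inside that voxel.
    """
    acc = defaultdict(lambda: [0.0, 0])   # voxel index -> [sum of z, point count]
    for x, y, z in points:
        key = (math.floor(x / edge), math.floor(y / edge), math.floor(z / edge))
        acc[key][0] += z
        acc[key][1] += 1
    return {key: total / count for key, (total, count) in acc.items()}
```

With this quantization, two passes over the same location whose altitude estimates differ by several metres fall into different voxels along the vertical index.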

Cyclist path starts toward the mess hall,

completes the lap traversing the area between ITA and the mess hall,

then turns right to ride down the street where the Dean's office is

located, turns right and follows straight until the U-turn, turns 180

degrees, follows straight, turns left and rides up the ramp toward the

parking lot between the ELE/COMP building and the library, and finally

returns to the starting point.

The distinct voxel colors at the

region of the starting point are due to the changing GPS satellite

geometry as the satellites follow their orbital paths in the sky during

the experiment, thus producing altitude estimates at the same location

differing by about 10m and affecting the 3D reconstruction.

ExperimentoINS-GPS-FluxoOptico_reconstr3DAbril2015.mp4

Video

captured by the 3-gimballed imaging pod Mk03 conceived, designed, 3D

printed, integrated with the sailplane, and tested in the lab -

equipped with an analog Sony Block IXA camera and operating in

gyrostabilized mode:

imageador 02.wmv

3D design of sailplane with retractable imaging pod.

MITSA-based target tracking and visual servoing by the pan/tilt step motors in a more recent imaging pod setup:

Rastreio20150602.avi

fc2_save_2015-06-16-170727-0000.mp4

Optical flow-based image stabilization. Video acquired while cycling at ITA campus with digital PointGrey Firefly monochrome camera on the helmet. Bicycle instrumented for video acquisition and with a video link to a remote station.

Biological drive

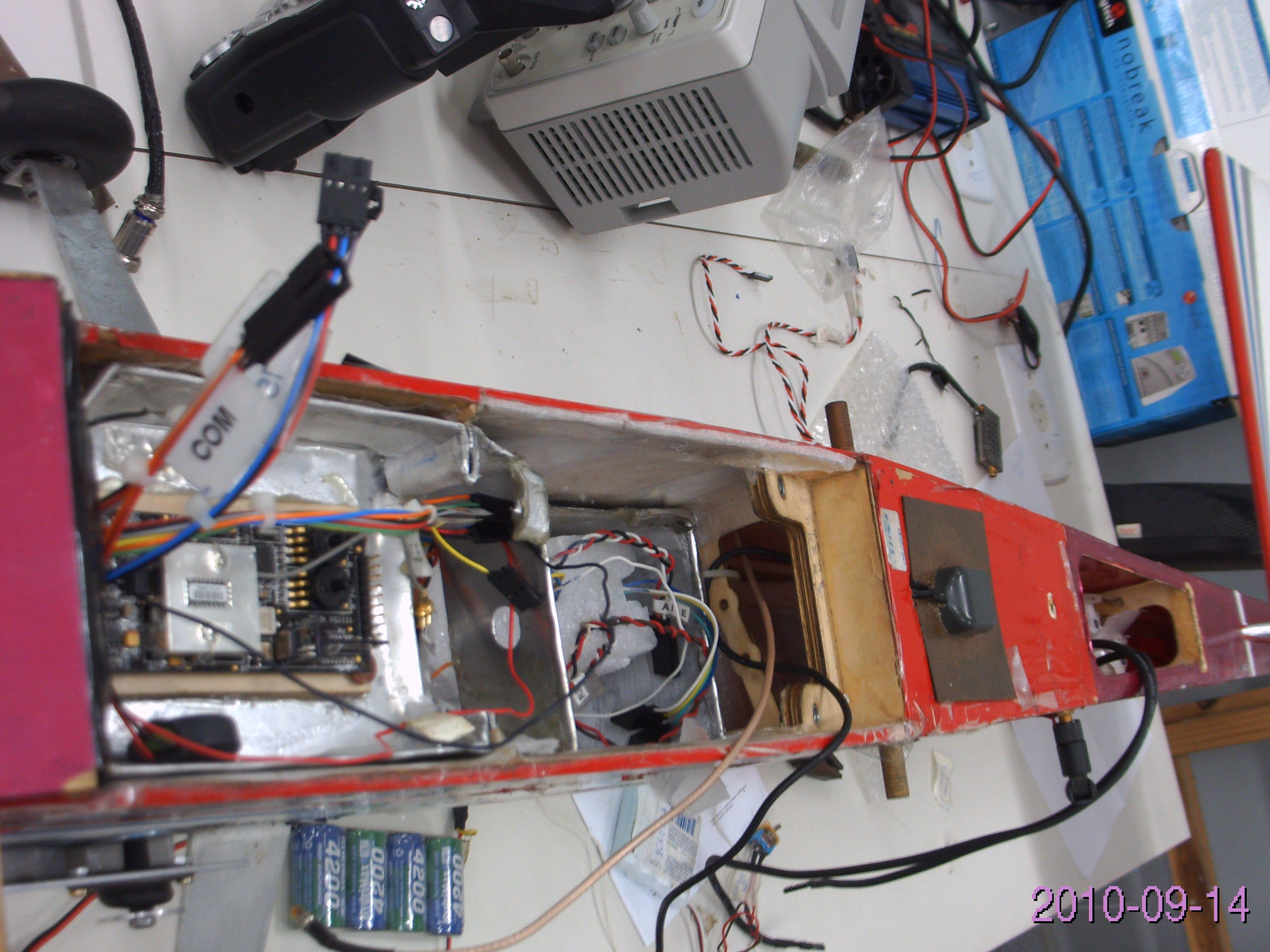

Video of an earlier 3-gimballed gyrostabilized imaging pod prototype - October 2010

First imaging pod prototypes - CNC-manufactured in polyacetal:

Former prototype with mechanical damping to mitigate vibrations from the internal combustion engine:

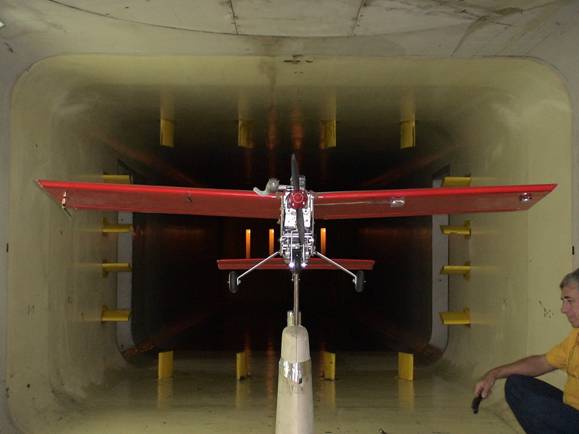

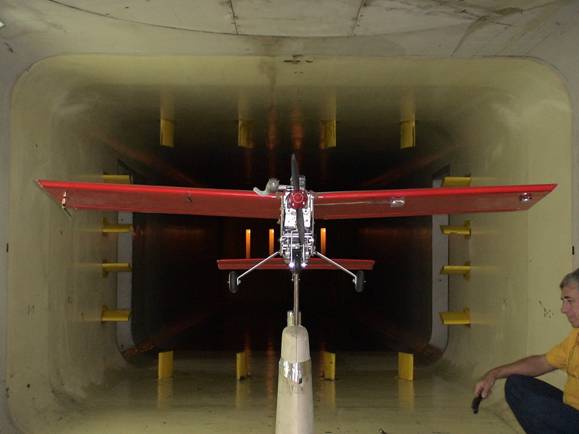

Wind tunnel tests at Instituto de Aeronáutica e Espaço (IAE)

Site under construction - coming next: flight test videos and images



Last updated: 28 September 2014 | Page built by: JW